Client Embedding

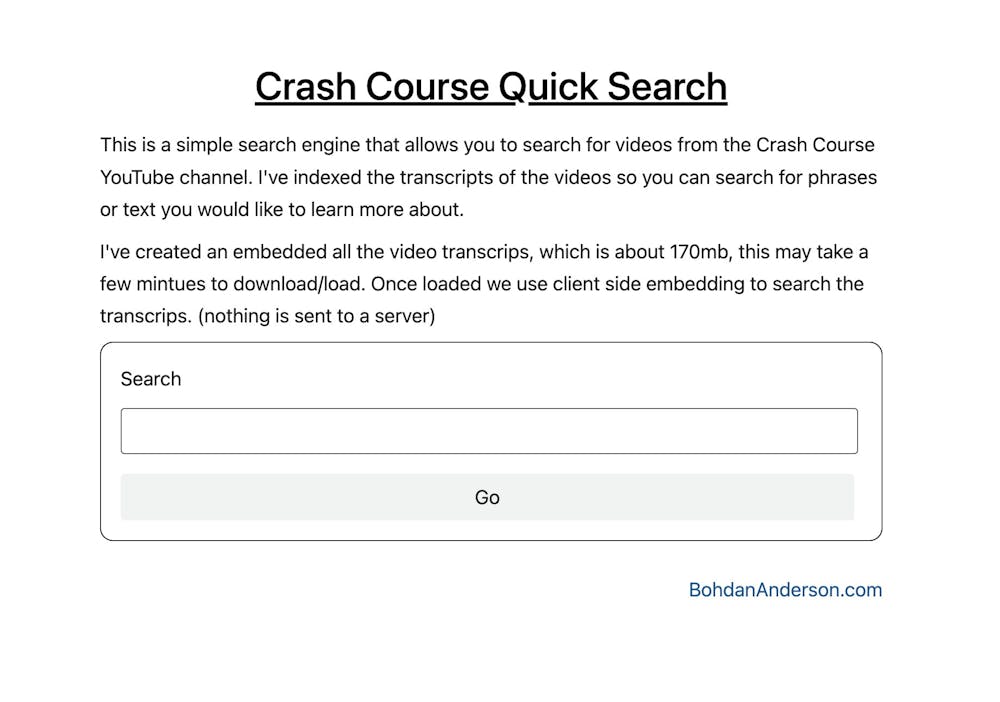

Can we create a vector database and run the embedding model entirely in the client's browser? Yes.

I set out to see whether this was possible.

The Vector search:

https://www.npmjs.com/package/vectra

Vectra is a package that does vector search in Node.js. It loads the whole dataset into RAM and then scans through it for each query. After looking at how it works, I just borrowed its distance function:

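```typescript
// Dot product of two equal-length vectors.
const dotProduct = (vector1: number[], vector2: number[]): number =>
  vector1.reduce((sum, value, i) => sum + value * vector2[i], 0);

// Cosine similarity with precomputed norms (the Euclidean length of each vector),
// so the norms don't have to be recomputed for every comparison.
export const normalizedCosineSimilarity = (
  vector1: number[],
  norm1: number,
  vector2: number[],
  norm2: number
): number => {
  return dotProduct(vector1, vector2) / (norm1 * norm2);
};
```

The Embedding: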

https://huggingface.co/Xenova/all-MiniLM-L6-v2

Transformers.js (@xenova/transformers) made this much easier than my previous attempts at running models in the browser.

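```typescript
import { pipeline, env } from "@xenova/transformers";

const MODEL = "Xenova/all-MiniLM-L6-v2";

// Always download the model from the Hugging Face Hub; don't look for local model files.
env.allowLocalModels = false;

// Create the pipeline once and reuse it, instead of reloading the model on every call.
let extractor: Promise<any> | null = null;

export default async function embeddings(input: string): Promise<number[]> {
  extractor ??= pipeline("feature-extraction", MODEL);
  const pipe = await extractor;
  // Mean-pool the token embeddings and L2-normalize, giving one 384-dimensional vector.
  const embedding = await pipe(input, { pooling: "mean", normalize: true });
  return Array.from(embedding.data as Float32Array);
}
```

This embedding model only produces 384-dimensional vectors, but that's more than enough for our use case.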

Since the vector DB was going to be sent to the client, I halved the size of the stored vectors by rounding each value to 6 digits after the decimal point.
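The rounding step might look like the sketch below. It assumes the vectors are stored as JSON arrays of numbers (the post doesn't say which format was used), and roundVector is a hypothetical helper name.

```typescript
// Hypothetical helper: round every component of a vector to a fixed number of
// decimal places so its JSON text is shorter. Six places matches the text above.
function roundVector(vector: number[], places = 6): number[] {
  const factor = 10 ** places;
  return vector.map((x) => Math.round(x * factor) / factor);
}

// Made-up example: compare the JSON length of a full-precision vector and its rounded copy.
const full = [0.123456789012345, -0.0456789123456789, 0.9876543210987654];
const rounded = roundVector(full);
console.log(rounded);
console.log(JSON.stringify(full).length, JSON.stringify(rounded).length);
```

Six decimal places keeps each component within 5e-7 of its original value, which is small relative to the differences between cosine scores in practice, while cutting most of the digits from the serialized text.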

The last time I used a model in the browser, it was one I had created myself, and I ended up converting it to ONNX.

The Data:

I like CrashCourse, and it has helped me quite a bit, so I used its videos as the data.

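From YouTube, I grabbed all of the channel's video links (which include the video IDs) by running this in the browser console:

```javascript
// Collect the href of every video thumbnail link on the page.
Array.from(
  document.querySelectorAll("a.yt-simple-endpoint.inline-block.style-scope.ytd-thumbnail")
).map((x) => x.href);
```

Then, using the Python YouTubeTranscriptApi, I downloaded the transcripts for all the videos.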
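The transcript API looks videos up by ID, so the links have to be reduced to their IDs first. The post doesn't show this step; here is a minimal sketch, assuming the links are standard watch?v=<id> URLs. videoIds is a hypothetical helper, and the example IDs are made up.

```typescript
// Hypothetical helper: pull the video ID (the `v` query parameter) out of each watch URL.
// Links without a `v` parameter are skipped.
function videoIds(links: string[]): string[] {
  const ids = links
    .map((href) => new URL(href).searchParams.get("v"))
    .filter((id): id is string => id !== null);
  return [...new Set(ids)]; // drop duplicates, keeping first-seen order
}

// Made-up links; the third one duplicates the first.
const links = [
  "https://www.youtube.com/watch?v=abc123XYZ_0",
  "https://www.youtube.com/watch?v=def456UVW-1&t=30s",
  "https://www.youtube.com/watch?v=abc123XYZ_0",
];
console.log(videoIds(links));
```

The resulting list of IDs can then be written to a file and read by the Python script that fetches the transcripts.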

Next, I created embeddings of the transcripts with the same model. Each embedding covers eight lines of a transcript, and consecutive chunks overlap by two lines.
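A sketch of that chunking, assuming each transcript is an array of caption lines: windows of eight lines that start every six lines, so adjacent chunks share two lines. chunkLines is a hypothetical name, not code from the post.

```typescript
// Hypothetical helper: split lines into windows of `size` lines,
// where each window shares `overlap` lines with the previous one.
function chunkLines(lines: string[], size = 8, overlap = 2): string[][] {
  if (overlap >= size) throw new Error("overlap must be smaller than size");
  const step = size - overlap; // 6 with the defaults
  const chunks: string[][] = [];
  for (let start = 0; start < lines.length; start += step) {
    chunks.push(lines.slice(start, start + size));
    if (start + size >= lines.length) break; // this window already reached the end
  }
  return chunks;
}

// 20 made-up transcript lines -> windows starting at lines 0, 6 and 12.
const transcript = Array.from({ length: 20 }, (_, i) => `line ${i}`);
const chunks = chunkLines(transcript);
console.log(chunks.map((c) => `${c[0]} .. ${c[c.length - 1]}`));
```

Each chunk would then be joined into a single string (for example with chunk.join(" "), an assumption) and passed through the same embeddings function used for queries. The overlap means a sentence that straddles a chunk boundary still appears whole in at least one chunk if it spans no more than two lines.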

The result was a 178 MB database, so I made a service worker cache it after the first load (I think it's working). Queries against this DB take about 1-2 s on my M1 Mac.
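Because the whole DB sits in memory, each query is a linear scan: embed the query, score it against every stored vector, and keep the best matches. A minimal sketch, assuming each entry stores its text, vector, and precomputed norm; the Entry shape and search function are hypothetical, not Vectra's API. Since the stored vectors were produced with normalize: true, their norms are about 1 and the score is essentially a dot product, but the sketch keeps the general cosine formula.

```typescript
// Hypothetical entry shape: the embedded text plus its vector and precomputed norm.
interface Entry {
  text: string;
  vector: number[];
  norm: number;
}

const dotProduct = (a: number[], b: number[]): number =>
  a.reduce((sum, x, i) => sum + x * b[i], 0);

const norm = (v: number[]): number => Math.sqrt(dotProduct(v, v));

// Brute-force search: score every entry by cosine similarity, sort, keep the top k.
function search(db: Entry[], query: number[], k = 5): { text: string; score: number }[] {
  const queryNorm = norm(query);
  return db
    .map((entry) => ({
      text: entry.text,
      score: dotProduct(query, entry.vector) / (queryNorm * entry.norm),
    }))
    .sort((a, b) => b.score - a.score)
    .slice(0, k);
}

// Tiny made-up example with 3-dimensional vectors instead of 384.
const makeEntry = (text: string, vector: number[]): Entry => ({ text, vector, norm: norm(vector) });
const db = [
  makeEntry("photosynthesis", [1, 0, 0]),
  makeEntry("mitochondria", [0.8, 0.6, 0]),
  makeEntry("french revolution", [0, 0, 1]),
];
console.log(search(db, [1, 0.1, 0], 2));
```

Every query touches every stored vector, so query time grows linearly with the size of the DB. Keeping a bounded min-heap of the best k would avoid sorting all the scores, but a full sort keeps the sketch short.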

Next steps:

A different model? What difference would it make?

Let users create their own data, and load and edit local vector DBs.

Move the search into a worker (a Web Worker) so it doesn't block the main thread. (It's just a performance change, and I'm curious to see how it works.)